Replicating Our World through Reality Capture

We can learn a lot about our world by studying the images we capture of our surroundings. Today, Extended Reality (XR) has dramatically expanded our capacity to recreate real objects, places, and spaces as believable replicas complete with actionable data. But how did we get here?

Earlier this fall we talked about why 3D content is used and where you might find it, but we didn’t dig deep into how 3D digital images are created. Below are a few terms worth exploring if you want to peek behind the curtain of a contemporary XR studio workflow.

Lightfields

How often do you think about the light around you? Constantly? Most of the time? As visual creatures, the illumination – or lack thereof – around us is impossible to ignore. So understanding how much light should or should not be replicated when recreating a real space in the digital world is critical to achieving an authentic experience.

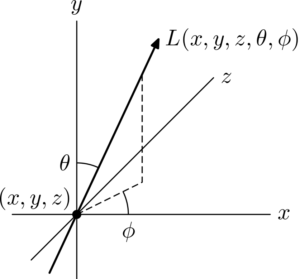

Mathematical relationships called functions describe how one variable quantity depends on another. Lightfield functions describe the amount of light flowing through every point in space, in every direction. Next time you view a 3D visualization or experience a VR space, note the light sources and think of the function below.

Plenoptic Function
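
One common way to write the plenoptic function is the seven-dimensional form usually attributed to Adelson and Bergen. The symbol names below are a conventional choice and an assumption here, not notation taken from this article:

$$
P = P(\theta, \phi, \lambda, t, V_x, V_y, V_z)
$$

Here $(V_x, V_y, V_z)$ is the viewing position, $(\theta, \phi)$ is the direction of the incoming ray, $\lambda$ is the wavelength, and $t$ is time. For a static scene at a fixed wavelength, this is often reduced to a five-dimensional radiance function:

$$
L = L(x, y, z, \theta, \phi)
$$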

Shuttle Oddity Loop

Photogrammetry

The science of using photographs to obtain geographic measurements is a practice nearly as old as photography itself.

Geology, archaeology, and meteorology have used photogrammetry to accurately plot, plan, and predict, respectively. Engineering, architecture – even law enforcement – apply data obtained through photography to complete equations and make decisions. For example, photogrammetry is what allows the creation of life-like building visualisations or the digital recreation of ancient cities and historical figures.

Once you apply all three dimensions to this process, capturing images from every conceivable angle, you’re able to achieve 3D digital twins. From there, 3D printing is also possible.
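
To make the idea of measuring from photographs concrete, here is a minimal sketch of the simplest case: two photos taken side by side, a known distance apart. It assumes an idealized, rectified stereo pair, and the function and parameter names are hypothetical, chosen for illustration. Real photogrammetry software solves a much larger version of this problem across many overlapping images.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Estimate the distance to a point seen in two side-by-side photos.

    Assumes an idealized, rectified stereo pair (an assumption for this sketch):
      focal_length_px: camera focal length, expressed in pixels
      baseline_m:      distance between the two camera positions, in meters
      disparity_px:    horizontal shift of the same point between the photos
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    # Similar triangles: nearby points shift a lot between photos, distant points shift little.
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # A point that shifts 25 pixels between two photos taken 0.5 m apart.
    print(depth_from_disparity(focal_length_px=1000, baseline_m=0.5, disparity_px=25))
```

The key relationship is that depth is inversely proportional to disparity, which is why capturing an object from many angles improves the accuracy of the reconstruction.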

In movies and video games, photogrammetry is used to combine live action with computer-generated imagery. That makes it essential technology for creating 360-degree video content and Virtual Reality experiences that include any imagery based on real objects and environments.

3D Scanning

Building on principles of photogrammetry, contemporary software enables us to quickly and accurately capture a real object and pull it into a digital world.

Whether we study that object for educational purposes or make it do something surreal for entertainment, we have the power to make it look, sound, and increasingly feel exactly like the original.

Enterprise settings expand on the industries above to include forestry, natural resources, construction sites, and urban planning. The data captured has high value for business intelligence and decision-making purposes.

For Real Estate specifically, products like Matterport Pro2 3D enable anyone to “make immersive digital experiences out of real-world environments.”

3D scanners and cameras range from consumer models priced at around $300 to large industrial models costing tens of thousands of dollars.

Once a 3D scanner is able to generate a point cloud image, the data can be converted into a mesh to maximize quality in the final visualization.
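
As a rough illustration of the point-cloud-to-mesh step, the sketch below handles only the easiest case: points that already sit on a regular grid, like a height map. This is a simplifying assumption, and `grid_points_to_mesh` is a hypothetical helper name. Point clouds from real scanners are usually unorganized and need a dedicated surface reconstruction method instead.

```python
def grid_points_to_mesh(heights):
    """Turn a regular grid of height samples into a triangle mesh.

    heights: list of rows, each row a list of z values (a simplified,
             organized point cloud; this layout is an assumption of the sketch).
    Returns (vertices, triangles), where triangles index into vertices.
    """
    rows = len(heights)
    cols = len(heights[0])

    # One vertex per sample: x is the column, y is the row, z is the height.
    vertices = [
        (float(c), float(r), float(heights[r][c]))
        for r in range(rows)
        for c in range(cols)
    ]

    # Split every grid cell into two triangles with a consistent winding order.
    triangles = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            i = r * cols + c  # index of the cell's first corner
            triangles.append((i, i + 1, i + cols))
            triangles.append((i + 1, i + cols + 1, i + cols))
    return vertices, triangles


if __name__ == "__main__":
    sample = [
        [0.0, 0.1, 0.0],
        [0.2, 0.5, 0.2],
        [0.0, 0.1, 0.0],
    ]
    verts, tris = grid_points_to_mesh(sample)
    print(len(verts), "vertices,", len(tris), "triangles")
```

A mesh like this gives renderers connected surfaces to shade and texture, which is what makes the final visualization look solid rather than like a cloud of dots.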

The increasing versatility and availability of 3D scanning technology means that we’re going to see it in the hands of more people for more purposes than ever in the future.

The range of options at our disposal covers everything from replicating a coffee mug to recreating a mountain … or more.

3D Mapping

Not all 3D scanners use photogrammetry; some technology relies on contact and some uses lasers, such as LiDAR. Like sonar and radar, LiDAR uses waves, capturing information about where and when they bounce back to detect distances and recreate shapes.
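
The "where and when they bounce back" idea can be sketched in a few lines. This is a simplified time-of-flight model, not the processing pipeline of any particular LiDAR product, and the function names and angle conventions are assumptions made for illustration.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s):
    """Distance to a surface, given how long a laser pulse took to return."""
    # The pulse travels out and back, so only half the trip counts as distance.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def polar_to_point(distance_m, azimuth_rad, elevation_rad):
    """Convert one range reading plus the beam direction into an (x, y, z) point.

    Assumed convention for this sketch: azimuth is measured in the horizontal
    plane from the x axis, elevation is measured upward from that plane.
    """
    horizontal = distance_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        distance_m * math.sin(elevation_rad),
    )


if __name__ == "__main__":
    d = range_from_round_trip(200e-9)  # a pulse that returned after 200 nanoseconds
    print(round(d, 3), "meters")
    print(polar_to_point(d, math.radians(90), 0.0))
```

Repeating this for many pulses fired in different directions produces the cloud of points from which shapes are recreated.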

The process of 3D mapping with a laser means that we can create incredibly detailed 3D terrain maps for applications like self-driving cars.

Accurate 3D maps combined with infrared sensors can also enable meaningful AR experiences. Addressing key usability issues like occlusion can transform AR into true Mixed Reality, getting us one step closer to achieving digital illusions in the real world.

To see what we mean, watch Pikachu in the Niantic video below. For his presence to seem real, he needs to disappear behind objects like the planters. Passersby need to obscure him as they move forward.
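
The occlusion rule described above comes down to a per-pixel depth comparison. The sketch below is a deliberately simplified illustration, assuming we already have a depth value for every pixel of the real scene (for example, from a 3D map). It is not how Niantic or any specific AR framework implements it, and all names are hypothetical.

```python
def composite_with_occlusion(camera_pixels, real_depth_m, virtual_pixels, virtual_depth_m):
    """Overlay a virtual character on a camera image, respecting occlusion.

    All four inputs are flat lists of the same length, one entry per pixel:
      camera_pixels:   what the camera sees
      real_depth_m:    distance to the real surface at that pixel
      virtual_pixels:  the character's pixel, or None where the character is absent
      virtual_depth_m: distance to the character at that pixel
    """
    output = []
    for cam, real_d, virt, virt_d in zip(
        camera_pixels, real_depth_m, virtual_pixels, virtual_depth_m
    ):
        # Draw the character only where it is closer to the viewer than the real world.
        if virt is not None and virt_d < real_d:
            output.append(virt)
        else:
            output.append(cam)
    return output


if __name__ == "__main__":
    # A planter 1.5 m away covers the middle pixel; the character stands 2 m away.
    camera = ["street", "planter", "street"]
    real_depth = [5.0, 1.5, 5.0]
    character = ["pika", "pika", None]
    character_depth = [2.0, 2.0, 0.0]
    print(composite_with_occlusion(camera, real_depth, character, character_depth))
```

In this example the character shows up against the street but vanishes behind the planter, which is the effect that makes a digital presence feel anchored in the real scene.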

Through accurate Reality Capture, we can even go as far as to produce realistic shadows from digital content. The example below from 6D.AI further illustrates how overcoming occlusion and creating persistent, shareable content takes us one step closer to everyday MR.

Stambol experts are able to give you a little or a lot of technical information when we create your project. Reach out to ask what we can build for you and how we do it.

Feature Image Credit: Jason / Adobe Stock